So that’s it! Thanks for reading, and feel free to leave any comments or questions below.

Third, I configured crontab to run the script every Thursday at 1:50 pm, followed by the bash script at 6:00 pm (the gap leaves enough time in case the report takes longer than expected):

    50 13 * * 4 cd /home/stefang/ && /usr/bin/Rscript -e "rmarkdown::render('')"
    00 18 * * 4 cd /home/stefang/ && /usr/bin/bash write_to_bucket.sh

This is intended for those who have some experience with R and with Jupyter notebooks, and.
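The two crontab entries above run silently, so a failed render is easy to miss. Here is a minimal sketch of a logging wrapper, assuming you are happy to add a small helper script of your own. The name `run_step` and the log path are hypothetical and not part of the original setup.

```shell
#!/bin/bash
# Hypothetical helper (not from the original post): run one scheduled step
# and record its start, end, and exit status in a log file.
LOG_FILE="${LOG_FILE:-/tmp/weekly_report.log}"   # assumed log location

run_step() {
  local name="$1"
  shift
  local status
  echo "$(date '+%Y-%m-%d %H:%M:%S') START ${name}" >> "$LOG_FILE"
  # Run the remaining arguments as the command, capturing its output too.
  "$@" >> "$LOG_FILE" 2>&1
  status=$?
  echo "$(date '+%Y-%m-%d %H:%M:%S') END ${name} (exit ${status})" >> "$LOG_FILE"
  return "$status"
}
```

A wrapper script could then call `run_step render /usr/bin/Rscript -e "rmarkdown::render('')" && run_step upload /usr/bin/bash write_to_bucket.sh`, so the upload starts only once the render has finished successfully. That is one possible alternative to leaving a fixed gap between the two cron entries, not what the setup above does.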

There were a few steps required for this task, which I will briefly sum up:

First, I set up Cloud Scheduler to start/stop my instance at specific times each week. Cloud Scheduler allows us to limit our usage of our VM, keeping costs low and reducing any wasted energy. The full process of setting this up also involves Cloud Functions and Pub/Sub.

Second, I created a bash script that would change the current directory, mount a GCS bucket, and upload my script’s output files (several CSVs and an HTML map file) to the bucket:

    #!/bin/bash
    cd /home
    cd stefang/
    # mount GCS bucket
    gcsfuse --implicit-dirs gcs-bucket/
    sudo mv /output/ gcs-bucket/
    # unmount GCS bucket
    fusermount -u gcs-bucket

Objectives: This tutorial shows you how to get started with data science at scale with R on Google Cloud.
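The upload script above moves the whole output directory in one go. A more defensive variant would copy only the report files (the CSVs and the HTML map) and stop if either directory is missing. This is a minimal sketch of that idea, not the original script: the function name `copy_outputs` is hypothetical, and both directories are passed in as arguments rather than hard-coded.

```shell
#!/bin/bash
# Hypothetical helper (not from the original post): copy the report outputs
# (*.csv and *.html) from a source directory into a destination directory,
# such as a mounted bucket, and print how many files were copied.
copy_outputs() {
  local src_dir="$1"
  local dest_dir="$2"
  local copied=0
  local f

  [ -d "$src_dir" ] || { echo "missing source: $src_dir" >&2; return 1; }
  [ -d "$dest_dir" ] || { echo "missing destination: $dest_dir" >&2; return 1; }

  for f in "$src_dir"/*.csv "$src_dir"/*.html; do
    [ -e "$f" ] || continue          # skip a glob pattern that matched nothing
    cp "$f" "$dest_dir"/ || return 1 # stop at the first failed copy
    copied=$((copied + 1))
  done

  echo "$copied"
}
```

Called between the mount and unmount steps, for example as `copy_outputs /output gcs-bucket`, this leaves the originals in place, so a failed upload can simply be retried.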

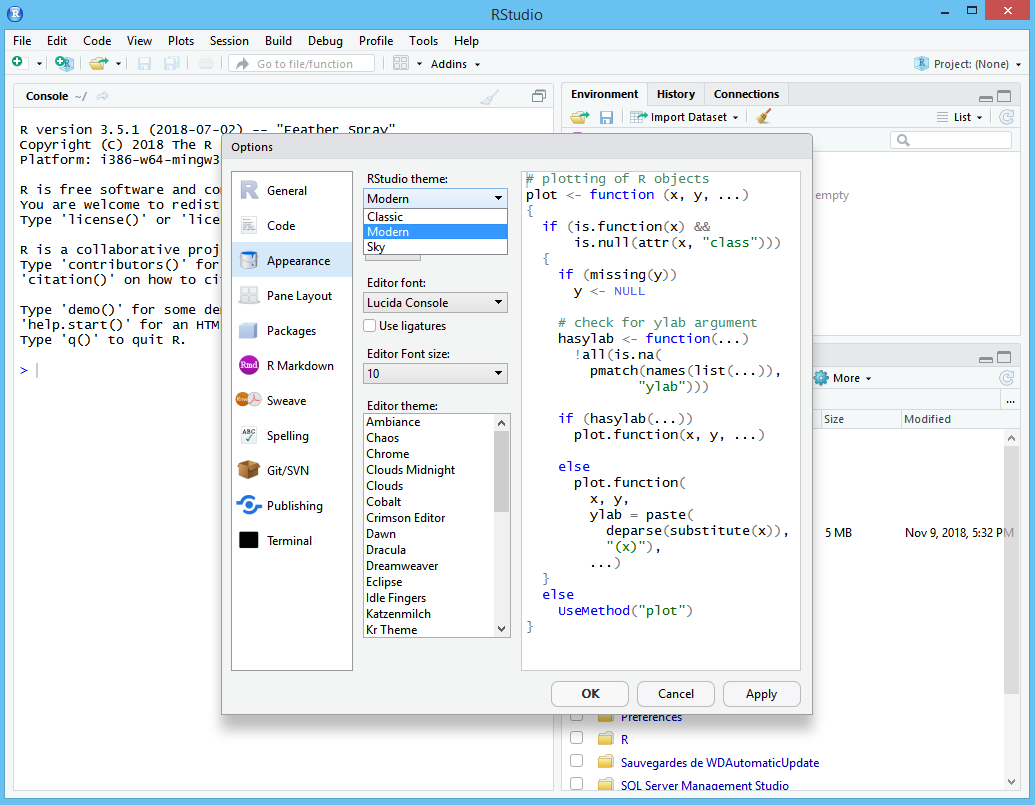

Click this link and log in to RStudio Cloud to try it out. Once you are logged in, issue the following two R commands in the R console (bottom-left):

    library(googleAnalyticsR)
    ga_auth()

Select `1: Yes` to confirm that you wish to keep your OAuth access credentials. The library should then launch a browser window and ask you to log in to Google; log in.

From this new directory, we can access all of our files (including any outputs from the script) via the shell. I wanted to fully automate this process so that, unless I had new code to push, the script would run without me having to SSH into the instance.
